Enterprise AI has entered a phase of accelerated adoption and strategic scrutiny.

The question has shifted from “Should we implement AI?” to “How do we scale it securely, intelligently, and sustainably?”

According to Gartner, more than 80% of enterprises will have deployed generative AI applications by 2026, yet measurable business impact remains inconsistent across industries.

The reason is that enterprises deploy advanced models, but without an orchestration architecture that enables true enterprise AI integration, they end up with fragmented systems, siloed data flows, disjointed workflows, and lapses in enterprise AI governance.

LLM integration with enterprise systems and vector databases now underpins a growing share of enterprise knowledge retrieval, automation, and decision intelligence. What organizations require is a model-neutral, database-independent orchestration framework: a true AI orchestration platform for enterprises that allows them to plug in any model or vector backend with data, workflows, and governance baked in.

GenE, an AI automation and orchestration platform, provides the architectural foundation for LLM and Vector DB Agnostic Orchestration, enabling unified integration, real-time automation, and governed scalability.

In this blog, let’s explore how GenE operationalizes this paradigm of AI workflow orchestration, what it means for enterprise digital transformation, and why it represents a critical inflection point in enterprise AI strategy.

The AI landscape in enterprises

Rapid rise of GenAI and LLMs

Large language models (LLMs) such as GPT-4, Claude, and Llama have become central to how businesses think about AI. They power natural-language generation, summarisation, reasoning, conversational agents, and more. But for enterprise AI integration, several challenges surface:

- Context limits, hallucinations, and domain-specificity. LLMs often lack real-time knowledge and struggle with multi-step reasoning without orchestration. (IBM)

- Multiple LLM providers, each with its own APIs, pricing models, and licensing agreements, which creates a risk of vendor lock-in and complicates LLM integration with enterprise systems. (getorchestra.io)

- The need to combine LLMs with retrieval (via vector search) and real-world enterprise data (structured/unstructured) rather than relying purely on pre-trained knowledge. (InterSystems Corporation)

The explosion of vector databases and retrieval architectures

To enable retrieval-augmented generation (RAG) and semantic search, enterprises increasingly deploy vector databases (Vector DBs), systems that store “embeddings” of text, documents, or other data and allow similarity search. Examples: Milvus (open-source), Chroma, and others.

Key needs:

- Ingesting enterprise data (documents, knowledge bases, CRM, ERP, etc.) → embedding → vector store → retrieval at runtime.

- Ensuring freshness, governance, multi-modal retrieval (text + images + graphs), and integration with the LLM layer.
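
The ingestion-to-retrieval path above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product implements it: `toy_embed` is a hypothetical stand-in for a real embedding model (it only builds a normalised bag-of-words vector), and `InMemoryVectorStore` is a hypothetical stand-in for a real vector database.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


def toy_embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for an embedding model: a unit-length bag-of-words vector."""
    tokens = (word.strip(".,?!:;\"'()") for word in text.lower().split())
    counts = Counter(token for token in tokens if token)
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


@dataclass
class InMemoryVectorStore:
    """Illustrative store only; a production system would use a real vector DB."""
    rows: list[tuple[str, dict[str, float]]] = field(default_factory=list)

    def ingest(self, documents: list[str]) -> None:
        # Ingestion step: embed each document and index it alongside its text.
        for doc in documents:
            self.rows.append((doc, toy_embed(doc)))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        # Retrieval step: rank by cosine similarity (dot product of unit vectors).
        q = toy_embed(query)

        def score(row: tuple[str, dict[str, float]]) -> float:
            return sum(weight * row[1].get(token, 0.0) for token, weight in q.items())

        ranked = sorted(self.rows, key=score, reverse=True)
        return [doc for doc, _ in ranked[:top_k]]


store = InMemoryVectorStore()
store.ingest([
    "Invoices are issued on the first business day of each month.",
    "Password resets require verification through the employee portal.",
])
top_hit = store.search("How do I reset my password?")
print(top_hit)
```

In a real deployment, the embedding call and the store would be replaced by a trained embedding model and a managed vector database, while the ingest-then-search shape stays the same.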

The integration challenge: enterprise systems, workflows & data silos

Enterprises are rarely green fields. They have ERP systems (e.g., SAP), CRM (Salesforce), knowledge bases, data lakes, BI platforms, legacy applications, and organizational processes with compliance, audit, governance, teams, and SLAs.

Simply slotting in an LLM or vector DB won’t suffice. The key issues:

- How to orchestrate workflows that span business systems + LLM + vector DB + analytics?

- How to ensure governance, audit trails, versioning, scaling, and model monitoring?

- How to avoid vendor lock-in to one LLM or vector DB, given how fast the landscape changes?

- How to deliver real business value (automation, insight, decision support) rather than siloed pilot projects? For example, a company may run many AI/ML pilots, yet recent enterprise surveys report that 95% fail to deliver business results.

What is LLM & Vector DB Agnostic Orchestration?

LLM and Vector DB Agnostic Orchestration refers to an integration framework that enables enterprises to connect, coordinate, and control diverse AI components without being constrained by a single model or database provider.

LLM-agnostic orchestration means the platform supports multiple large language models, such as GPT, Claude, or Llama, allowing organizations to switch or combine them based on use case or performance needs.

Similarly, Vector DB-agnostic orchestration ensures compatibility with various vector databases like Milvus, Pinecone, or Chroma, as well as hybrid retrieval systems, enabling flexible and efficient data access.

The orchestration layer acts as the enterprise control plane, coordinating workflows, managing model calls, integrating business logic, ensuring governance, and maintaining visibility across systems.
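
One way to picture this independence is an orchestrator that depends only on two small interfaces. The sketch below is an assumption-laden illustration, not GenE's actual API: `LLMClient`, `VectorStore`, `Orchestrator`, `StubLLM`, and `StaticStore` are all hypothetical names, and the stubs return canned values instead of calling a real provider.

```python
from dataclasses import dataclass
from typing import Protocol


class LLMClient(Protocol):
    def complete(self, prompt: str) -> str: ...


class VectorStore(Protocol):
    def search(self, query: str, top_k: int) -> list[str]: ...


@dataclass
class Orchestrator:
    """Workflow logic depends only on the two interfaces above."""
    llm: LLMClient
    store: VectorStore

    def answer(self, question: str) -> str:
        context = self.store.search(question, top_k=2)
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
        return self.llm.complete(prompt)


class StubLLM:
    """Hypothetical provider adapter; a real one would wrap a vendor SDK call."""
    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] received {len(prompt.splitlines())} prompt lines"


class StaticStore:
    """Hypothetical vector-store adapter that returns fixed documents."""
    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, top_k: int) -> list[str]:
        return self.docs[:top_k]


orchestrator = Orchestrator(
    llm=StubLLM("model-a"),
    store=StaticStore(["Policy A applies to Region X.", "Escalate within four hours."]),
)
first = orchestrator.answer("What is the escalation protocol?")
orchestrator.llm = StubLLM("model-b")  # swap providers; the workflow is unchanged
second = orchestrator.answer("What is the escalation protocol?")
print(first, second, sep="\n")
```

Because `answer` never names a vendor, replacing the model or the store is a one-line change at the point where the orchestrator is configured.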

Why does this matter? Enterprise AI ecosystems evolve rapidly.

Agnostic orchestration delivers future-proof flexibility, eliminates vendor lock-in, and enables best-fit model selection for each use case. It also enhances operational efficiency through unified monitoring, scaling, and versioning.

Most importantly, it enforces governance and auditability, ensuring every model, agent, and workflow functions cohesively and compliantly, transforming fragmented AI components into a seamless, intelligent enterprise system.

Key components of LLM & Vector DB agnostic orchestration

| Component | Purpose |
| --- | --- |
| Integration Layer (APIs / Connectors) | Establishes connectivity between enterprise systems such as ERP, CRM, and data lakes with the orchestration framework, enabling seamless data and process integration. |
| Retrieval Engine / Vector Store Connector | Ingests structured and unstructured data, embeds and indexes it, and retrieves context through vector or hybrid search to power intelligent responses. |
| LLM Invocation Layer | Supports multiple language models and providers, managing prompt templates, chaining logic, agent collaboration, and external tool calls for adaptive AI workflows. |
| Workflow Engine / Orchestration Logic | Defines and manages end-to-end AI pipelines covering query, retrieval, prompt generation, response creation, post-processing, and system updates, while handling branching, errors, and multi-agent coordination. |
| Monitoring, Observability & Governance | Tracks system and model performance, latency, and data drift; maintains detailed audit trails and version control for transparency and regulatory compliance. |
| UI / Orchestration Dashboard | Provides an interactive interface for AI operations and business teams to visualize workflows, switch between models, select vector databases, and inspect logs in real time. |
| Security, Compliance & Scalability Infrastructure | Ensures secure data flow through encryption, access control, and compliance policies; supports hybrid or on-premise deployment with autoscaling and failover mechanisms for enterprise reliability. |

Challenges in Enterprise AI that orchestration solves

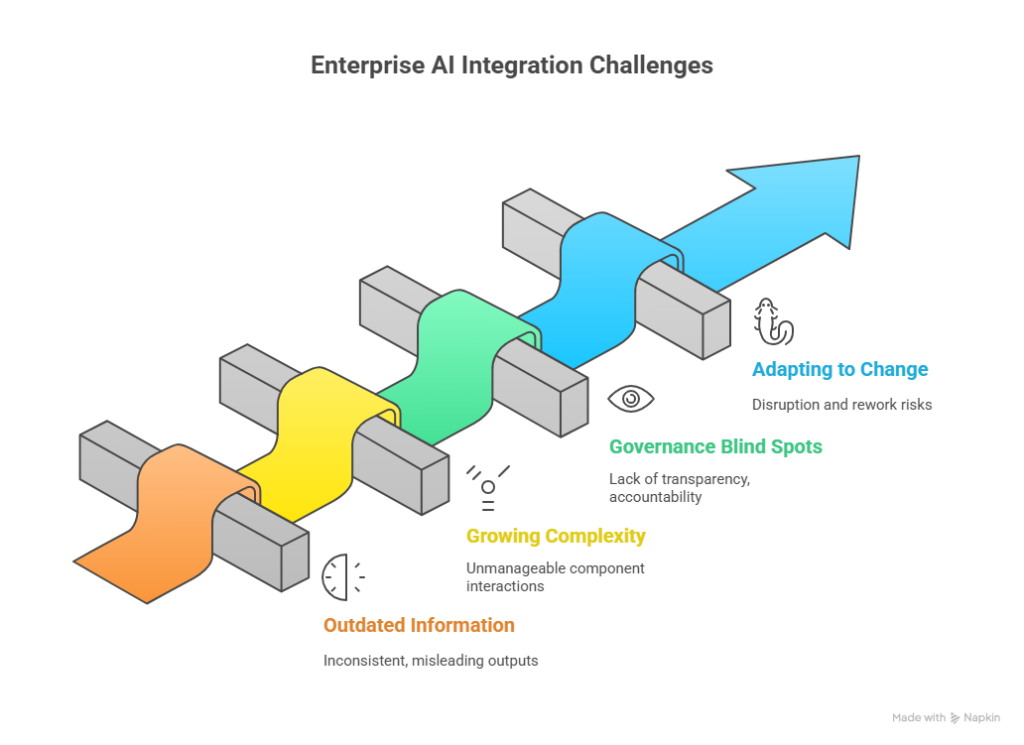

As enterprises race to integrate generative AI into business operations, many encounter a familiar set of obstacles: fragmented pilots, unreliable outputs, operational sprawl, and governance blind spots. LLM and Vector DB Agnostic Orchestration addresses these issues head-on, enabling organizations to transition from scattered experiments to enterprise-grade, scalable AI ecosystems.

From Siloed Pilots to Scalable AI

Many enterprises have experimented with LLMs or semantic search in small, contained pilots. These efforts often deliver local success but fail to integrate with enterprise workflows, data pipelines, or compliance processes. Over time, this creates isolated systems, useful in silos, but impossible to scale. Orchestration provides the framework to connect these experiments into the broader enterprise environment, turning innovation into operational capability.

Outdated Information and Unreliable Outputs

Without continuous access to enterprise data, language models risk relying on outdated or incomplete information. The result is inconsistent or misleading outputs that erode trust. By combining vector-based retrieval with orchestration, enterprises ensure that every response draws from the most current, contextually relevant data, keeping insights reliable and actionable.

Growing Complexity in AI Operations

Modern enterprise AI ecosystems often include multiple models, databases, and intelligent agents. Coordinating how these components interact (how tasks are routed, results validated, and processes monitored) can quickly become unmanageable. Orchestration introduces structure and control, coordinating workflows end-to-end and maintaining consistency as the ecosystem expands.

Governance and Accountability

Regulated industries, in particular, demand transparency around how automated decisions are made. Orchestration embeds governance into the process itself, recording which model was used, what data informed a decision, and who approved it. This built-in traceability supports compliance, makes bias easier to detect, and builds confidence in automated outcomes.
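
As a rough illustration of what such a trail could contain, the sketch below stores one record per decision with the three facts named above: the model, the source data, and the approver. The `AuditRecord` and `AuditLog` names and their fields are assumptions made for this example, and the log lives in memory rather than in durable storage.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    workflow: str
    model: str                           # which model was used
    source_documents: tuple[str, ...]    # what data informed the decision
    approved_by: Optional[str] = None    # who approved it (None means pending)
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only log stored as JSON lines (in memory here, for illustration)."""

    def __init__(self) -> None:
        self._lines: list[str] = []

    def record(self, entry: AuditRecord) -> None:
        self._lines.append(json.dumps(asdict(entry), sort_keys=True))

    def entries(self) -> list[dict]:
        return [json.loads(line) for line in self._lines]

    def pending_approval(self) -> list[dict]:
        return [e for e in self.entries() if e["approved_by"] is None]


log = AuditLog()
log.record(AuditRecord("contract-review", "model-a", ("policy-y.pdf",)))
log.record(
    AuditRecord("contract-review", "model-a", ("policy-y.pdf",), approved_by="j.doe")
)
print(len(log.pending_approval()), "decision(s) awaiting approval")
```

Serialising each record as one JSON line keeps the log easy to ship to whatever audit store an enterprise already uses.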

Adapting to Constantly Changing Tools

The AI landscape shifts rapidly: new models, new vector databases, new pricing models. Without orchestration, each change risks disruption and rework. An agnostic orchestration layer absorbs this volatility, allowing enterprises to integrate new technologies without rebuilding from scratch, preserving flexibility while staying aligned with emerging innovation.

GenE: The AI Orchestration and Automation Platform for Enterprises

GenE is a purpose-built AI orchestration platform for enterprises, engineered to bring together LLMs, vector databases, and enterprise systems into a single, coordinated ecosystem. It enables organizations to design and execute end-to-end workflows, connecting data ingestion, retrieval, model invocation, and system updates within one governed, scalable framework.

At its core, GenE delivers LLM and Vector DB Agnostic Orchestration, ensuring that enterprises are never confined to one model, database, or provider. The platform is designed to enable seamless enterprise AI integration, LLM integration with enterprise systems, and AI workflow automation.

GenE’s Core Value Proposition

LLM-Agnostic Design

GenE supports a wide range of large language models, from proprietary models such as GPT, Claude, and Gemini to open-source models such as Llama or Mistral. Enterprises can plug in any model, combine them for specific use cases, or switch providers as needs evolve without rewriting workflows or disrupting operations.
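
To make "combine or switch" concrete, here is a minimal sketch of per-task model routing with fallback. It is an assumption about how such routing might look, not a description of GenE internals: `ModelRouter`, the model names, and the two stub functions are hypothetical, and the fallback-on-error behaviour is a design choice added for the example.

```python
from typing import Callable

ModelFn = Callable[[str], str]


class ModelRouter:
    """Maps each task to an ordered list of model names and tries them in turn."""

    def __init__(self, models: dict[str, ModelFn], routes: dict[str, list[str]]) -> None:
        self.models = models
        self.routes = routes

    def run(self, task: str, prompt: str) -> tuple[str, str]:
        errors = []
        for name in self.routes[task]:
            try:
                return name, self.models[name](prompt)
            except Exception as exc:  # broad on purpose: any provider failure triggers fallback
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all models failed: " + "; ".join(errors))


def unavailable_model(prompt: str) -> str:
    # Simulates a provider outage.
    raise TimeoutError("provider timed out")


def backup_model(prompt: str) -> str:
    return f"summary of: {prompt}"


router = ModelRouter(
    models={"primary-model": unavailable_model, "backup-model": backup_model},
    routes={"summarise": ["primary-model", "backup-model"]},
)
used_model, output = router.run("summarise", "Q3 incident report")
print(used_model, "->", output)
```

Changing providers then means editing the `routes` table rather than the workflows that call `run`.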

Vector DB-Agnostic Integration

Vector databases are critical for contextual search and retrieval. GenE supports multiple vector database backends, such as Pinecone, Milvus, Weaviate, and Chroma, and enables hybrid retrieval architectures. This ensures enterprises can choose or replace vector stores based on performance, compliance, or cost considerations, all without changing the orchestration logic.
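
Hybrid retrieval usually means merging a vector-search ranking with a keyword-search ranking. The source does not say which merging method GenE uses; the sketch below shows reciprocal rank fusion (RRF), one widely used option, with hypothetical document IDs and the commonly chosen constant `k = 60`.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores the sum of 1 / (k + rank) over all lists."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


vector_hits = ["doc-3", "doc-1", "doc-7"]    # e.g. from similarity search
keyword_hits = ["doc-1", "doc-9", "doc-3"]   # e.g. from keyword search
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
print(fused)
```

Because RRF works on ranks rather than raw scores, it does not care which vector database or keyword engine produced each list, which suits a backend-agnostic design.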

Enterprise Workflow Orchestration

Beyond connecting models and data, GenE orchestrates complete business workflows. It manages every stage from data ingestion and preprocessing to retrieval, generation, and integration with enterprise systems like ERP, CRM, or BI tools.

The result is a unified AI pipeline that automates not just responses but real business actions such as updating customer records, generating reports, or triggering notifications in downstream systems.

Governance and Compliance Built In

GenE embeds governance into every workflow. It maintains detailed audit trails of model usage, input data, and decision outcomes. Role-based access control (RBAC), version management, and approval workflows ensure that AI operations remain transparent, traceable, and compliant with enterprise and industry standards.

Scalability and Performance

GenE is designed for production-grade deployment. It supports enterprise-scale automation with high availability, load balancing, and horizontal scalability. Whether running on-premise, in the cloud, or in hybrid mode, GenE maintains consistency, security, and performance across all environments.

Why GenE Matters in Enterprise Systems

The strength of GenE lies in its ability to unify technology diversity under a single operational architecture. With GenE, enterprises can use the best model for each task, integrate any data source, and evolve alongside a changing vendor ecosystem, all while maintaining governance, scalability, and business alignment.

In a market defined by constant technological churn, GenE gives enterprises stability. It transforms AI adoption from isolated experimentation into a structured, measurable, and scalable transformation, bridging the gap between innovation and operational execution.

By connecting models, databases, and systems into one cohesive framework, it ensures that every AI action is traceable, relevant, and ready for real-world impact.

Key Enterprise Use Cases for GenE

Below are several real-world enterprise scenarios that illustrate how GenE transforms fragmented AI initiatives into cohesive, production-grade solutions.

Knowledge-Base Assistant

Every large enterprise accumulates a massive and diverse knowledge base: training manuals, SOPs, policy documents, service logs, case histories, and product guides. The challenge lies not in storing this information, but in retrieving and using it efficiently. Traditional search tools often fall short, forcing employees to navigate multiple systems or rely on outdated documents.

With GenE, the enterprise can convert this static information into a dynamic, conversational knowledge assistant.

The process begins with document ingestion and vector embedding. GenE orchestrates the workflow: it integrates with a preferred vector database (such as Pinecone or Milvus), links retrieval mechanisms, and routes user queries to the most suitable LLMs.

A user can simply ask, “What’s the escalation protocol for equipment failure in Region X?” and the system retrieves the exact, policy-aligned answer, complete with context and traceability. If an LLM update or data source change is required, GenE allows administrators to switch models or databases without overhauling the entire workflow.

Moreover, GenE routes answers back to enterprise systems such as CRMs or internal chat interfaces. This ensures that knowledge flows seamlessly between AI systems and business platforms, while GenE continuously monitors performance, usage patterns, and response accuracy to maintain quality over time.

Customer Service Automation

Customer service is one of the most immediate areas where AI orchestration delivers measurable ROI. In a high-volume enterprise environment, customer queries can range from simple account updates to complex troubleshooting or compliance-related requests.

Here’s how a GenE-powered customer service workflow unfolds:

- A customer query enters the system via email, chatbot, or voice input.

- GenE’s orchestration triggers intent classification using an appropriate LLM to identify the nature of the query.

- If the query is routine (e.g., “Reset my password” or “Send my latest invoice”), GenE routes it through a retrieval-plus-generation workflow that combines data from the CRM, vector databases, and a language model to produce a reliable answer.

- If the query requires human expertise, GenE seamlessly escalates it to a live agent, carrying along the full context, previous exchanges, and retrieved information.
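
The routing decision in these steps can be sketched as follows. This is a simplified illustration under stated assumptions: `classify_intent` is a hypothetical keyword-based stand-in for the LLM intent classifier, the intent labels are invented for the example, and the automated branch only reports the route instead of generating an answer.

```python
from dataclasses import dataclass, field

ROUTINE_INTENTS = {"password_reset", "invoice_request"}


def classify_intent(query: str) -> str:
    """Hypothetical stand-in for an LLM intent classifier (keyword rules only)."""
    text = query.lower()
    if "password" in text:
        return "password_reset"
    if "invoice" in text:
        return "invoice_request"
    return "other"


@dataclass
class Ticket:
    query: str
    history: list[str] = field(default_factory=list)


def handle(ticket: Ticket) -> dict:
    intent = classify_intent(ticket.query)
    if intent in ROUTINE_INTENTS:
        # Routine queries go to the retrieval-plus-generation path.
        return {"route": "automated", "intent": intent}
    # Escalation carries the earlier exchanges so the agent has full context.
    return {
        "route": "human_agent",
        "intent": intent,
        "context": ticket.history + [ticket.query],
    }


routine = handle(Ticket("Reset my password"))
escalated = handle(Ticket("My device overheats after the update", ["Hi, I need help."]))
print(routine["route"], escalated["route"])
```

Returning a plain dictionary for each decision also gives the orchestration layer something simple to write to its audit log.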

This orchestration eliminates repetitive manual intervention while maintaining full auditability. Each transaction, model decision, and data source involved is logged, enabling compliance with service standards and governance policies.

For customer-facing teams, GenE becomes the invisible backbone that powers hybrid human-AI collaboration, reducing response times, enhancing accuracy, and improving overall customer satisfaction.

Risk and Compliance Workflows

In regulated industries such as banking, insurance, pharmaceuticals, or energy, AI cannot operate in isolation. Every recommendation or decision must be explainable, traceable, and compliant with policy frameworks.

Consider a compliance officer who needs to verify whether a contract adheres to internal or regulatory guidelines.

With GenE, this complex evaluation becomes a structured workflow:

- The officer initiates a query such as “Does contract X comply with policy Y?”

- GenE triggers data retrieval from document repositories or contract databases.

- The content is embedded into a vector database and retrieved using similarity search to ensure context-rich analysis.

- The appropriate LLM is invoked to generate a compliance summary, highlighting aligned clauses and potential risks.

- Finally, the output is logged, versioned, and, if risk is flagged, routed automatically to the compliance team for review.
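
The last two steps, versioned logging and conditional routing, can be sketched like this. Everything here is a hypothetical simplification: `review_clause` replaces the LLM compliance check with a banned-term lookup, retrieval is skipped by passing the clauses in directly, and the queue names `compliance_team` and `archive` are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class ComplianceResult:
    contract_id: str
    policy_id: str
    model: str
    flagged_clauses: list[str]
    version: int
    routed_to: str


def review_clause(clause: str, banned_terms: list[str]) -> bool:
    """Stand-in for the LLM compliance check: flags clauses containing a banned term."""
    return any(term in clause.lower() for term in banned_terms)


def run_compliance_check(
    contract_id: str,
    clauses: list[str],
    policy_id: str,
    banned_terms: list[str],
    history: list[ComplianceResult],
) -> ComplianceResult:
    flagged = [clause for clause in clauses if review_clause(clause, banned_terms)]
    result = ComplianceResult(
        contract_id=contract_id,
        policy_id=policy_id,
        model="stub-reviewer",
        flagged_clauses=flagged,
        version=len(history) + 1,  # each run gets the next version number
        routed_to="compliance_team" if flagged else "archive",
    )
    history.append(result)  # every run is logged, flagged or not
    return result


history: list[ComplianceResult] = []
risky = run_compliance_check(
    "contract-x",
    ["Payment is due in 30 days.", "Liability is unlimited for the supplier."],
    "policy-y",
    ["unlimited"],
    history,
)
clean = run_compliance_check(
    "contract-x", ["Payment is due in 30 days."], "policy-y", ["unlimited"], history
)
print(risky.routed_to, clean.routed_to)
```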

Throughout this process, GenE enforces governance and auditability, maintaining full visibility into which model was used, what data informed the response, and who reviewed or approved the output.

Unlike ad-hoc LLM deployments, GenE embeds accountability at the orchestration level, ensuring enterprises meet the highest standards of explainability and risk control.

Enterprise Digital Transformation

One of the biggest challenges in enterprise AI adoption is that most AI tools remain “add-ons”, operating outside the main digital infrastructure. GenE changes that dynamic by acting as the integration fabric that binds AI capabilities directly into core enterprise systems.

For instance, in a manufacturing company, production data stored in ERP systems, quality metrics in BI dashboards, and maintenance reports in document stores often exist in separate silos. GenE can orchestrate these disparate systems into an intelligent, automated workflow.

A production anomaly flagged in the ERP can automatically trigger:

- Data retrieval from historical maintenance logs (via vector search).

- Root-cause analysis by an LLM trained on operational documentation.

- Generation of a summarized action plan, which is then pushed back into the ERP or maintenance tracking system.
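
An event-triggered version of this flow might look like the sketch below. It is illustrative only: the `on`/`emit` helpers, the `production_anomaly` event name, the machine ID, and the sample maintenance entries are all hypothetical, and the vector search and LLM analysis are replaced by a dictionary lookup and a formatted string.

```python
from typing import Callable

Handler = Callable[[dict], dict]
_handlers: dict[str, list[Handler]] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a handler for an event type (decorator)."""
    def register(fn: Handler) -> Handler:
        _handlers.setdefault(event_type, []).append(fn)
        return fn
    return register


def emit(event_type: str, payload: dict) -> list[dict]:
    """Run every handler registered for the event and collect the results."""
    return [handler(payload) for handler in _handlers.get(event_type, [])]


# Hypothetical sample data standing in for maintenance logs in a vector store.
MAINTENANCE_LOGS = {
    "press-7": ["hydraulic seal replaced", "pressure sensor recalibrated"],
}


@on("production_anomaly")
def build_action_plan(event: dict) -> dict:
    machine = event["machine"]
    logs = MAINTENANCE_LOGS.get(machine, [])  # stand-in for vector search
    # Stand-in for LLM root-cause analysis and summarisation.
    plan = f"Inspect {machine}; review {len(logs)} past maintenance entries"
    return {"machine": machine, "action_plan": plan, "evidence": logs}


results = emit("production_anomaly", {"machine": "press-7"})
print(results[0]["action_plan"])
```

In practice the returned plan would be written back to the ERP or maintenance tracker through its own connector.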

Enterprises benefit from real-time decision support, automated process updates, and a measurable improvement in operational responsiveness.

In short, GenE, an AI orchestration platform for enterprises, turns digital transformation into an operational reality, ensuring that every system, dataset, model, and process works in harmony to create a more adaptive, efficient enterprise ecosystem.

Final Thoughts

As enterprises transition from isolated AI pilots to organization-wide deployment, orchestration becomes the cornerstone of sustainable success.

GenE delivers exactly that: an intelligent, LLM and Vector DB agnostic framework that unifies data, models, and workflows across the enterprise. It replaces fragmentation with cohesion, ensuring every AI process is traceable, scalable, and compliant.

By embedding enterprise AI governance, performance, and agility into one orchestration layer, GenE transforms AI from a promising concept into a dependable operational advantage and helps enterprises turn intelligence into measurable business outcomes, today and in the future.

Frequently Asked Questions (FAQ)

What does “agnostic” really mean in this context?

A: It means the orchestration layer is independent of the underlying LLM provider and vector-database technology. You’re not locked into one vendor. You can switch models or vector stores without rebuilding the orchestration framework.

Why not just pick one LLM and vector database and build around it?

A: That works for a single use case, but enterprises evolve: new models emerge, performance/cost trade-offs change, new data types appear, and regulations shift. An agnostic orchestration layer gives you flexibility and resilience.

What about vector databases? Why is “agnostic” needed there?

A: Vector DB technology is evolving fast. Some are better for on-premise, others for cloud; some handle multimodal data, some offer specific retrieval features. Being able to plug in or switch vector DBs without rewriting retrieval logic is a major advantage.

What kinds of enterprise systems can GenE integrate with?

A: CRMs (e.g., Salesforce), ERPs (e.g., SAP), data warehouses/lakes, knowledge bases, BI systems, chatbots, custom applications. The idea is to orchestrate across all these systems, plus the AI stack (LLM + vector DB + retrieval + workflows).