As AI adoption increases across industries, there is growing recognition that performance alone is not enough. Enterprise teams, from compliance officers to technology leads, are focused on a more critical question: Can we trust the AI systems we are deploying?

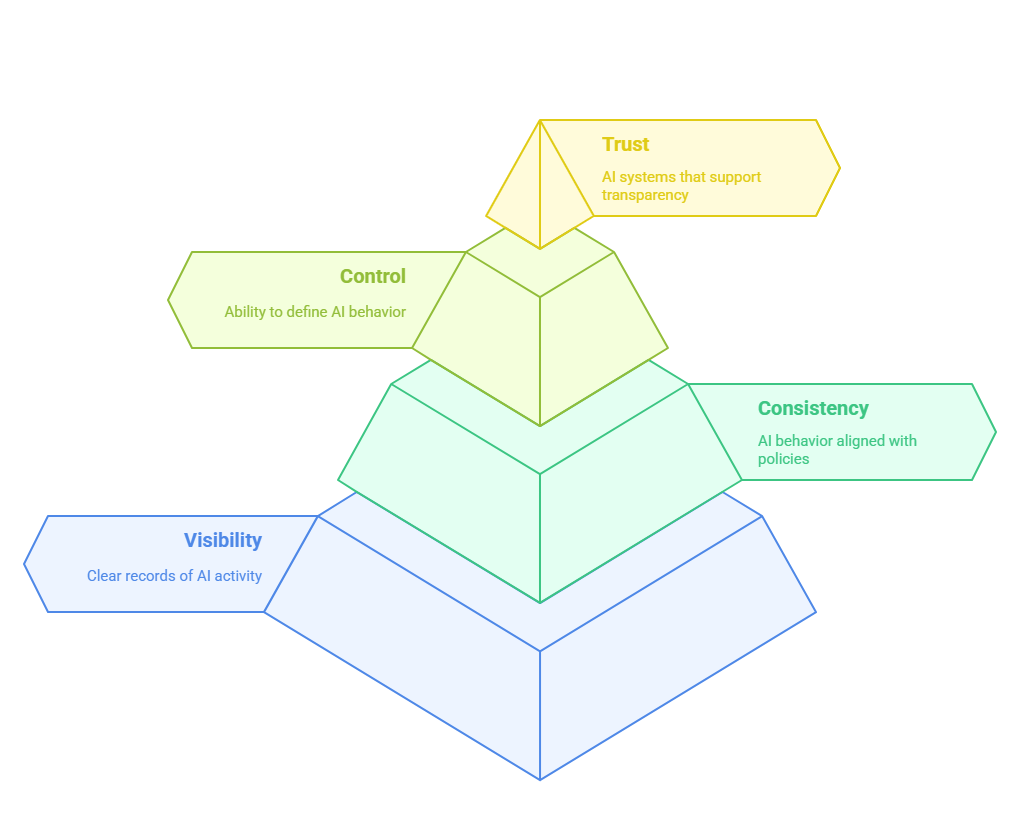

Trust in AI comes from visibility, consistency, and control. It’s not enough for a system to deliver results; it must also show how those results were achieved, what decisions were made, and whether they align with internal policies and external regulations. Responsible AI goes beyond ethical statements or broad goals. It requires concrete mechanisms that ensure accountability at every step.

This includes having clear records of AI activity, the ability to define how AI tools should behave in specific environments, and ways to limit or customize access based on roles and responsibilities. Responsible AI means building systems that support transparency, maintain compliance, and protect business integrity by design.

Today, some AI platforms are enabling this shift by embedding features like AI logging and auditability, guardrails, custom memory controls, and role-based visibility directly into their core. These capabilities give enterprises the operational control needed to adopt AI at scale while ensuring it stays aligned with business values and ethical AI governance standards.

What Is Responsible AI?

Responsible AI refers to the practice of designing, developing, and deploying AI systems in a way that aligns with ethical principles, legal standards, and organizational values. It ensures that AI behaves in predictable, explainable, and fair ways, especially when used in enterprise environments where decisions impact people, operations, and compliance outcomes.

In practical terms, Responsible AI includes several key elements: transparency, traceability, accountability, fairness, and security.

These principles guide how AI systems are built and how they behave in production.

Responsible AI is not a one-time check; it is a continuous process that includes monitoring, auditing, and adapting AI behavior as business needs and regulations evolve.

Why Responsible AI Matters for Enterprises

Enterprises operate in environments where scale, compliance, and accountability go hand in hand. When AI is introduced into these systems, whether for customer interactions, internal operations, or decision-making support, it must meet the same standards expected of any enterprise-grade tool.

Responsible AI provides a framework for aligning AI behavior with enterprise expectations. It ensures that AI doesn’t operate in isolation but fits within the organization’s existing governance, risk, and compliance (GRC) ecosystem.

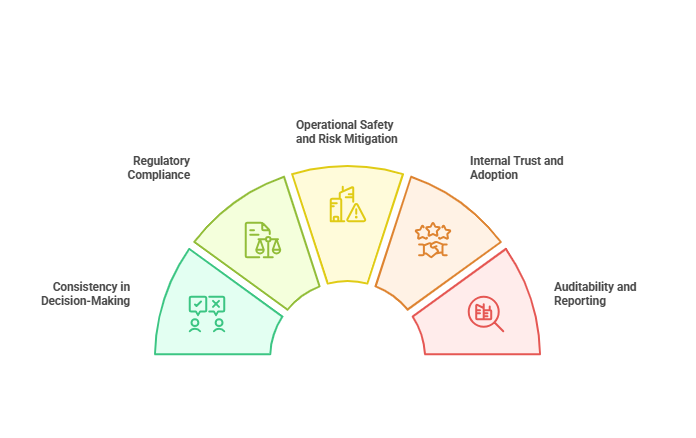

Here’s why it matters:

- Consistency in Decision-Making

- Regulatory Compliance

- Operational Safety and Risk Mitigation

- Internal Trust and Adoption

- AI Logging, Auditability, and Reporting

Responsible AI transforms AI from a technical tool into a business-ready capability. It becomes easier to scale AI when each use case includes built-in transparency, behavioral controls, and reporting. The ability to define boundaries, monitor activity, and adapt behavior is what allows AI to support, not disrupt, enterprise operations.

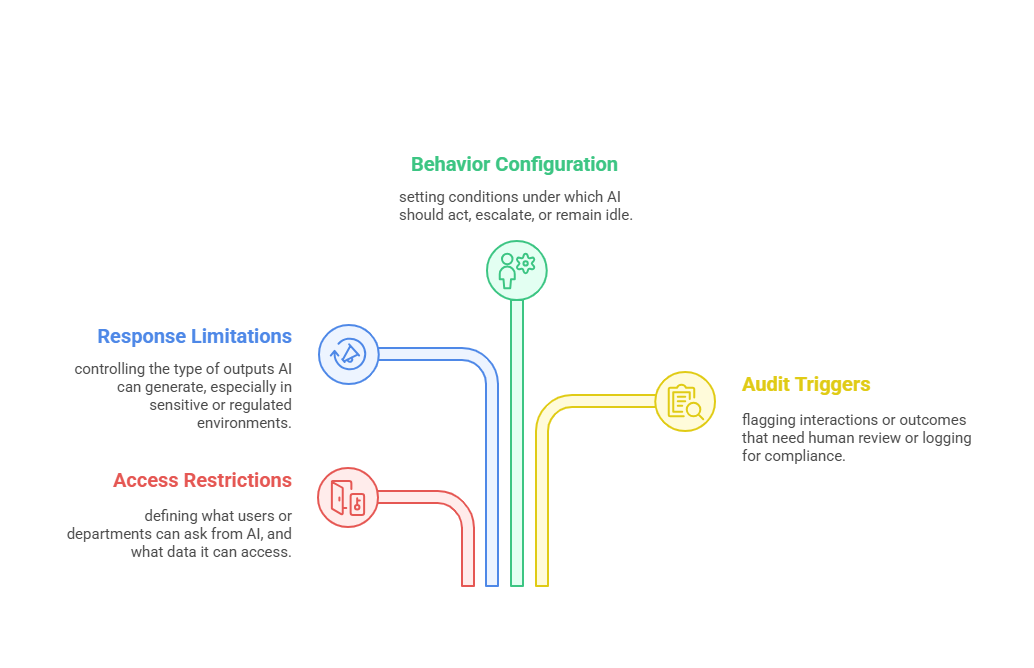

What Are AI Guardrails?

AI guardrails are predefined rules, configurations, and controls that determine how AI systems behave in different situations. In an enterprise setting, they act as safeguards, ensuring that AI operates within acceptable boundaries, follows business policies, and does not take unauthorized actions.

Guardrails can include:

- Access restrictions – defining what users or departments can ask of AI, and what data it can access.

- Response limitations – controlling the type of outputs AI can generate, especially in sensitive or regulated environments.

- Behavior configuration – setting conditions under which AI should act, escalate, or remain idle.

- Audit triggers – flagging interactions or outcomes that need human review or must be logged for compliance audits.

These AI guardrails are not about limiting AI’s usefulness; they are about making it safe, predictable, and aligned with business goals. With proper configuration, guardrails give organizations the confidence to let AI handle tasks without constant oversight.
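To make the four guardrail types above more concrete, here is a minimal Python sketch. It assumes a hypothetical policy object (`GuardrailPolicy`, `check_request`, and `filter_response` are illustrative names, not GenE’s API) that combines an access restriction per role, an output restriction based on blocked terms, and an audit trigger that escalates certain prompts for human review.

```python
from dataclasses import dataclass

# Hypothetical policy schema for illustration only; not GenE's actual API.
# All terms and keywords are assumed to be stored in lowercase.
@dataclass
class GuardrailPolicy:
    allowed_sources: dict[str, set[str]]  # access restriction: role -> permitted data sources
    blocked_output_terms: set[str]        # response limitation: terms that must not appear in output
    review_keywords: set[str]             # audit trigger: prompts that need human review

@dataclass
class Decision:
    allowed: bool
    needs_review: bool
    reason: str

def check_request(policy: GuardrailPolicy, role: str, source: str, prompt: str) -> Decision:
    # Access restriction: deny unless the role was explicitly granted this source.
    if source not in policy.allowed_sources.get(role, set()):
        return Decision(False, False, f"role '{role}' may not access '{source}'")
    # Behavior configuration + audit trigger: proceed, but escalate matching prompts.
    text = prompt.lower()
    flagged = any(word in text for word in policy.review_keywords)
    return Decision(True, flagged, "escalated for review" if flagged else "ok")

def filter_response(policy: GuardrailPolicy, output: str) -> str:
    # Response limitation: withhold the whole output if it contains a blocked term.
    text = output.lower()
    if any(term in text for term in policy.blocked_output_terms):
        return "[response withheld by policy]"
    return output

policy = GuardrailPolicy(
    allowed_sources={"support": {"crm"}, "finance": {"crm", "ledger"}},
    blocked_output_terms={"salary"},
    review_keywords={"refund"},
)
print(check_request(policy, "support", "ledger", "Show Q3 totals").allowed)   # False
print(check_request(policy, "support", "crm", "Issue a refund").needs_review)  # True
print(filter_response(policy, "Her salary is 90k"))  # [response withheld by policy]
```

The design choice here is deny-by-default: a role that is not listed gets no data sources at all, which is usually the safer starting point in regulated environments.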

The Foundation – Why Transparency is Central to Trust

Trust in enterprise AI is not optional; it’s foundational. The table below highlights how transparency directly supports trust and operational control:

| Transparency Feature | What It Enables |
| --- | --- |
| Traceability | Tracks every AI input, output, and action taken, making reviews and audits possible. |
| Explainability | Helps business users and auditors understand how AI arrived at a specific outcome. |
| Operational Clarity | Provides visibility into how AI contributes to day-to-day workflows and processes. |
| Accountability | Assigns ownership and enables oversight by showing who used AI and for what purpose. |

Transparent AI systems reduce uncertainty and build confidence across teams from compliance to operations. They allow organizations to adopt AI with fewer risks and better alignment to business needs.

GenE’s Logging – A Transparent Record of Every AI Action

GenE includes an AI logging and auditing system that provides a full trail of how AI is used across your organization. Here’s what gets tracked and why it matters:

| Log Type | What It Tracks | Why It Matters |
| --- | --- | --- |
| Token Usage Logs | Tracks how many tokens are used per user, task, or function | Helps monitor cost, scale usage, and allocate resources effectively |
| Model-Level Insights | Identifies which AI models (GPTs or others) are being accessed | Ensures the right models are used and helps optimize performance |
| Connector Activity Logs | Shows how GenE AI features interact with tools like SAP, Salesforce, Jira, etc. | Provides visibility into end-to-end automation and system integration |
| App Usage Logs | Monitors which GenE apps or workflows are used most often | Highlights valuable use cases and uncovers adoption trends |
| User Activity Reports | Breaks down AI usage by user, team, or department | Enables accountability, targeted training, and process tuning |

These logs turn GenE from a black box into a transparent, auditable AI partner, giving leaders the data they need to make informed decisions about AI strategy.
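The log types in the table above can be thought of as fields on a single structured record that is then aggregated in different ways. The following is a minimal Python sketch under that assumption; `AILogEntry`, its fields, and the model names `model-a`/`model-b` are hypothetical, and GenE’s real log schema may differ.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Optional

# Hypothetical log record for illustration; GenE's real log schema may differ.
@dataclass(frozen=True)
class AILogEntry:
    timestamp: datetime
    user: str
    department: str
    model: str                # which model served the request (model-level insights)
    app: str                  # which app or workflow was used (app usage logs)
    connector: Optional[str]  # external system touched, if any (connector activity)
    tokens: int               # tokens consumed (token usage logs)

def tokens_by(entries: Iterable[AILogEntry], field_name: str) -> Counter:
    """Sum token usage grouped by any field, e.g. 'user', 'department', or 'model'."""
    totals: Counter = Counter()
    for entry in entries:
        totals[getattr(entry, field_name)] += entry.tokens
    return totals

def usage_count_by(entries: Iterable[AILogEntry], field_name: str) -> Counter:
    """Count requests grouped by a field, e.g. 'app' or 'connector'."""
    return Counter(getattr(entry, field_name) for entry in entries)

now = datetime.now(timezone.utc)
log = [
    AILogEntry(now, "alice", "finance", "model-a", "invoice-review", "SAP", 1200),
    AILogEntry(now, "bob", "sales", "model-b", "quote-builder", "Salesforce", 300),
    AILogEntry(now, "alice", "finance", "model-a", "invoice-review", None, 300),
]
print(tokens_by(log, "user"))      # Counter({'alice': 1500, 'bob': 300})
print(usage_count_by(log, "app"))  # Counter({'invoice-review': 2, 'quote-builder': 1})
```

Keeping one record type and grouping it on demand means cost reports, model insights, and user activity reports all come from the same audit trail rather than from separate, possibly inconsistent sources.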

GenE’s Guardrails – Governing AI Behavior in Real Time

As AI becomes more embedded in enterprise workflows, the importance of responsible control grows. GenE’s AI guardrails provide that control without reducing the AI’s usefulness.

At its core, GenE allows organizations to create layered permissions, behavior rules, and output controls based on their specific risk profile and industry requirements. For example, a finance team can restrict GenE from accessing personal salary data, while the marketing team can configure it to follow a defined tone and brand voice when generating content. These controls can be applied across all users or customized per role.

What sets GenE apart is the real-time flexibility of its guardrails. Enterprises can quickly update rules based on evolving regulations, new business needs, or emerging risks without rewriting code or retraining models. This dynamic setup keeps GenE aligned with current policies as they change.

In short, GenE’s AI guardrails don’t just prevent errors; they build operational confidence. By shaping what AI can and cannot do, organizations ensure their automation is consistent, compliant, and always under control.
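Updating rules “without rewriting code or retraining models” usually means the rules live in configuration data that the system reads at request time. Below is a minimal Python sketch of that idea, assuming a hypothetical JSON rule format with a global `"*"` layer plus per-role layers; `PolicyStore`, `redact`, and the field names are illustrative, not GenE’s configuration format.

```python
import json

# Hypothetical rule format: policies live in data, not code, so changing a rule
# means editing configuration rather than rewriting code or retraining a model.
INITIAL_RULES = """
{
  "*":         {"blocked_fields": ["ssn"]},
  "finance":   {"blocked_fields": ["salary"]},
  "marketing": {"tone": "friendly, on-brand"}
}
"""

class PolicyStore:
    def __init__(self, rules_json: str) -> None:
        self.load(rules_json)

    def load(self, rules_json: str) -> None:
        # Swapping in a new rule set takes effect on the next request.
        self.rules = json.loads(rules_json)

    def rules_for(self, role: str) -> dict:
        # Layering: global rules ("*") apply to every role; role rules add to them.
        base = self.rules.get("*", {})
        specific = self.rules.get(role, {})
        blocked = set(base.get("blocked_fields", [])) | set(specific.get("blocked_fields", []))
        return {**base, **specific, "blocked_fields": sorted(blocked)}

def redact(record: dict, rules: dict) -> dict:
    """Drop any field the caller's role is not allowed to see."""
    return {k: v for k, v in record.items() if k not in rules["blocked_fields"]}

store = PolicyStore(INITIAL_RULES)
employee = {"name": "Ana", "salary": 90000, "bonus": 5000, "ssn": "000-00-0000"}
print(redact(employee, store.rules_for("finance")))  # {'name': 'Ana', 'bonus': 5000}

# A new policy arrives: finance must also stop seeing bonuses. No code change needed.
store.load('{"*": {"blocked_fields": ["ssn"]}, "finance": {"blocked_fields": ["salary", "bonus"]}}')
print(redact(employee, store.rules_for("finance")))  # {'name': 'Ana'}
```

Merging blocked fields as a union (rather than letting role rules overwrite global ones) means a role-specific rule can only add restrictions, never silently remove an organization-wide one.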

The Synergy – Why Logs and Guardrails Must Work Together

Logs and guardrails each serve a distinct purpose, but it’s when they operate together that AI becomes both accountable and dependable at scale.

| GenE’s Logging System | GenE’s Real-Time Guardrails | Combined Impact |
| --- | --- | --- |
| Tracks every AI input, output, and interaction | Controls what AI is allowed to access or generate | Enables real-time monitoring and enforcement of responsible behavior |
| Offers visibility into token usage and model activity | Applies behavioral rules and compliance configurations | Ensures both transparency and control over enterprise AI workflows |
| Highlights adoption trends and usage patterns | Prevents policy violations and sensitive data exposure | Reduces risk while increasing trust across departments |
| Provides audit trails for post-analysis | Guides AI behavior in live environments | Supports proactive governance and retroactive accountability |
| Surfaces inefficiencies or misuse across teams | Adapts to new risks, regulations, or business rules | Creates a closed-loop system for continuous improvement and well-governed deployment |

Together, logs and AI guardrails transform GenE from a powerful AI assistant into a governable, trustworthy enterprise system, one that teams can scale with confidence.
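The closed loop described above can be sketched in a few lines: every guardrail decision is written to the log, and the log is then summarized to show where rules are being hit. This is a minimal Python illustration under stated assumptions; `AuditLog`, `governed_call`, `guardrail_hits`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real model call.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, **event) -> None:
        # Every decision, allowed or not, becomes a timestamped log entry.
        event["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(event)

def governed_call(
    role: str,
    prompt: str,
    model: Callable[[str], str],
    allowed_roles: set[str],
    blocked_terms: set[str],
    log: AuditLog,
) -> str:
    # Guardrail 1 (before the model runs): is this role allowed to use the assistant?
    if role not in allowed_roles:
        log.record(role=role, outcome="denied")
        return "[request denied by policy]"
    output = model(prompt)
    # Guardrail 2 (after the model runs): does the output contain restricted content?
    if any(term in output.lower() for term in blocked_terms):
        log.record(role=role, outcome="output_blocked")
        return "[response withheld by policy]"
    log.record(role=role, outcome="allowed")
    return output

def guardrail_hits(log: AuditLog) -> Counter:
    """Feedback loop: count non-allowed outcomes per role to guide rule tuning."""
    return Counter((e["role"], e["outcome"]) for e in log.entries if e["outcome"] != "allowed")

def fake_model(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"Draft answer about {prompt}"

log = AuditLog()
governed_call("intern", "Q3 revenue", fake_model, {"analyst"}, {"salary"}, log)
governed_call("analyst", "salary bands", fake_model, {"analyst"}, {"salary"}, log)
governed_call("analyst", "Q3 revenue", fake_model, {"analyst"}, {"salary"}, log)
print(guardrail_hits(log))
# Counter({('intern', 'denied'): 1, ('analyst', 'output_blocked'): 1})
```

Logging allowed requests as well as blocked ones matters here: without the full picture, a reviewer cannot tell whether a role hits guardrails rarely or constantly.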

Real-World Applications of GenE’s Responsible AI Framework

Responsible AI isn’t theoretical anymore; it’s a growing expectation. Enterprises are actively integrating frameworks like GenE’s, combining transparent system logs with configurable AI guardrails to maintain ethical standards, compliance, and performance. Here are three real-world application areas where this approach is already making a measurable difference:

1. Financial Services – Enhancing Compliance in Customer Interactions

Leading banks and fintechs are applying responsible AI practices to monitor and govern AI-generated responses in real time across customer service platforms. With audit logs tracking every conversation and strict AI guardrails ensuring no private or regulatory-sensitive data is exposed, GenE-style frameworks support both customer satisfaction and regulatory alignment.

McKinsey reports that over 50% of financial institutions have adopted AI with compliance-led frameworks to support the responsible scaling of automation.

2. Healthcare – Safeguarding Patient Data During AI-Powered Operations

Hospitals and health tech platforms are using similar frameworks to ensure AI can assist clinicians or automate back-end tasks without breaching HIPAA or GDPR rules. Logging ensures a traceable record of AI decisions, while guardrails prevent unauthorized access or accidental disclosure of sensitive data.

According to Gartner, over 65% of healthcare organizations implementing AI now use transparent AI systems to protect patient safety and data integrity.

3. Manufacturing – Enforcing Safety & Protocols in AI Recommendations

Manufacturing companies deploying AI for scheduling, planning, and shop-floor decisions now embed guardrails to avoid unsafe suggestions and log usage for audit and QA teams. This helps them meet industry-specific regulations and standards (such as OSHA requirements or ISO standards) and builds confidence among frontline teams.

Boston Consulting Group highlights that AI success in manufacturing depends on “strong governance frameworks with transparent AI systems and human oversight.”

These examples show that responsible AI isn’t just an idea; it’s a requirement being put into practice in mission-critical sectors. GenE’s framework of logs and guardrails aligns well with how enterprises are bringing trust and structure into AI deployments.

How GenE Operationalizes Responsible AI

While many organizations talk about responsible AI, operationalizing it is what truly sets them apart. GenE brings responsible AI into everyday enterprise workflows through built-in mechanisms that are both configurable and user-friendly. This isn’t just compliance; it’s applied governance.

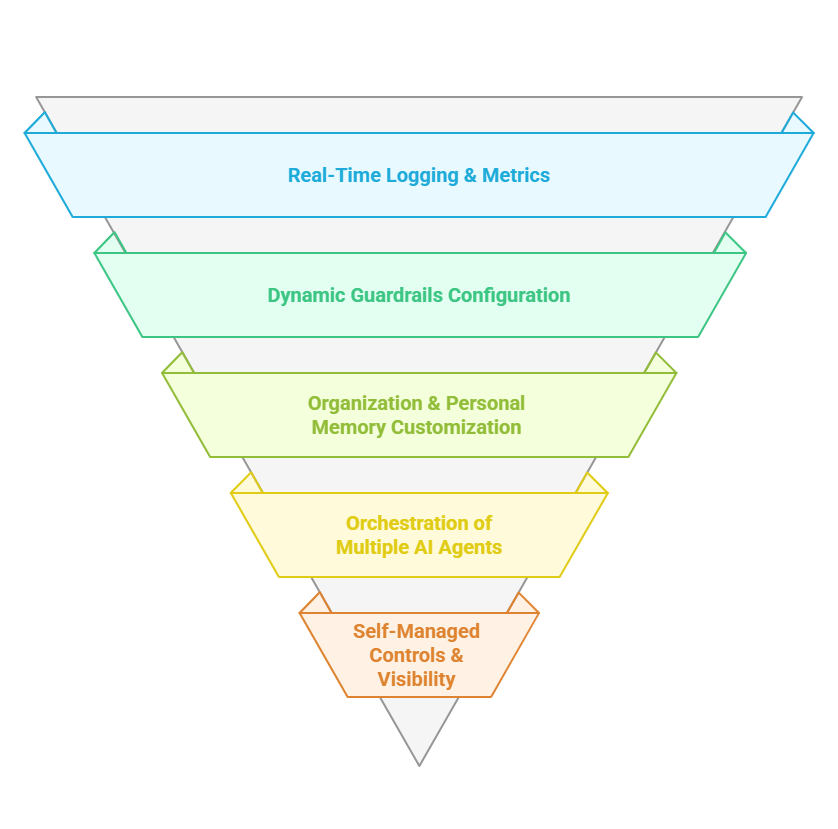

Here’s how GenE puts Responsible AI into action:

- Real-Time Logging & Metrics

Every AI interaction, whether it’s a chat that uses a connector like Salesforce or a custom GPT completing a finance task, is recorded with time-stamped logs. Enterprises can access data on token usage, model behavior, app performance, and user activity, creating a robust audit trail.

- Dynamic Guardrails Configuration

With granular control over AI access and output, organizations can set limits based on role, department, data sensitivity, or use case. Guardrails operate in real time, preventing potential misuse before it happens.

- Organization & Personal Memory Customization

GenE lets teams define what the AI should remember, from user preferences to org-level data patterns, while also controlling what it must forget. This strikes a balance between personalization and compliance.

- Orchestration of Multiple AI Agents

Through its agentic AI and orchestration features, GenE ensures that even when multiple AI agents collaborate on a business process (like quote generation or onboarding), every step is logged and governed by predefined rules.

- Self-Managed Controls & Visibility

GenE doesn’t just enforce rules; it empowers enterprise users to view, adjust, and improve them. Logs and guardrails aren’t hidden; they’re surfaced in dashboards, giving control back to the business.
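The memory controls described above combine three ideas: organization-wide memory, personal memory that overrides it, and categories that must never be retained. Here is a minimal Python sketch of those ideas; `MemoryStore`, its methods, and the `"health"` category are hypothetical illustrations, not GenE’s memory implementation.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class MemoryStore:
    never_store: set[str]  # categories that must not be retained, e.g. "health"
    org: dict[str, str] = field(default_factory=dict)                  # org-level memory
    personal: dict[str, dict[str, str]] = field(default_factory=dict)  # user -> personal memory

    def remember(self, key: str, value: str, category: str, user: Optional[str] = None) -> bool:
        # Compliance rule: refuse to store anything in a forbidden category.
        if category in self.never_store:
            return False
        if user is None:
            self.org[key] = value
        else:
            self.personal.setdefault(user, {})[key] = value
        return True

    def forget_user(self, user: str) -> None:
        # "What it must forget": drop all personal memory for one user.
        self.personal.pop(user, None)

    def recall(self, user: str) -> dict[str, str]:
        # Personal preferences override org-wide defaults with the same key.
        return {**self.org, **self.personal.get(user, {})}

memory = MemoryStore(never_store={"health"})
memory.remember("report_format", "PDF", "preference")                # org-wide default
memory.remember("report_format", "slides", "preference", user="ana")  # personal override
print(memory.remember("diagnosis", "flu", "health", user="ana"))      # False (refused)
print(memory.recall("ana"))  # {'report_format': 'slides'}
memory.forget_user("ana")
print(memory.recall("ana"))  # {'report_format': 'PDF'}
```

Checking the forbidden categories at write time, rather than filtering at read time, means restricted data never enters memory in the first place, which is simpler to audit.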

Together, these mechanisms ensure GenE isn’t just an AI interface; it’s a governed, intelligent system enterprises can rely on.

Looking Ahead – The Future of Responsible AI

The next phase of enterprise AI isn’t just about expanding capabilities; it’s about reinforcing credibility. As AI agents become more embedded in critical operations, the pressure to demonstrate ethical use, auditability, and safety will only grow. Responsible AI will shift from being a differentiator to a core expectation, not just from regulators but from enterprise customers, partners, and internal teams. Platforms like GenE, with built-in transparency and guardrails, offer a blueprint for what the future demands.

Here’s what’s coming next:

- Ethical AI Governance Will Be Non-Negotiable

Auditable logs, explainability, and built-in controls like those GenE offers will become standard in every AI deployment, much like data privacy compliance is today.

- Dynamic Guardrails Will Evolve

Instead of rigid rules, AI systems will adopt adaptive guardrails that evolve with organizational values and real-world usage patterns.

- Procurement Will Demand Ethical Proof

Enterprises will favor AI platforms that demonstrate responsible AI in action, especially in regulated industries.

In short, Responsible AI will no longer be a separate layer; it will be part of how enterprise AI is built and deployed from day one.

Building Trust Through Responsible AI

Trust in AI doesn’t come from what the system says it can do; it comes from what the system proves it does, consistently and within ethical boundaries.

That’s why trust is built not just through results, but through the structures behind the results: transparency, control, and accountability.

With GenE’s approach, which integrates logs, guardrails, configurable memory, and customizable AI agents, enterprises no longer have to rely on black-box automation.

They are able to govern AI behavior in real time, track outcomes at every step, and evolve guardrails as business needs shift.

This shift from reactive to proactive ethical AI governance is the cornerstone of enterprise trust. When teams know how AI operates, what data it uses, and how decisions are made, confidence naturally grows.

Responsible AI, when operationalized thoughtfully, becomes the most powerful enabler of long-term adoption.

Conclusion

As AI continues to transform enterprise functions, from customer support and procurement to planning and finance, it must also earn the right to operate. That right is granted through transparency, safety, and ethical design, not just performance.

GenE’s built-in logs and guardrails, though never the hero of the story, quietly power that trust behind the scenes. They allow enterprises to deploy AI at scale with confidence, knowing the system is both intelligent and accountable. In the end, responsible AI isn’t just a feature; it’s a foundation.

By weaving transparency into operations and putting governance at the center of AI design, enterprises position themselves not just as adopters of technology but as leaders in the future of trusted automation.

FAQs

1. What are AI guardrails, and why are they important?

AI guardrails are configurable rules and controls that define what AI systems can and cannot do. They ensure safe, ethical, and compliant operation, especially in sensitive or regulated environments.

2. How do logs contribute to responsible AI?

Logs record every AI interaction and decision, creating an auditable trail. This helps enterprises track outcomes, analyze performance, and stay compliant with regulations.

3. How does GenE support real-time ethical AI governance?

GenE provides live dashboards for logs, token usage, app performance, and more. It allows teams to set guardrails based on role, data type, or process, offering full visibility and control.

4. Can logs and guardrails be customized for different teams or functions?

Yes. GenE’s features support role-based access, department-level preferences, and custom GPTs, allowing governance policies to match the unique needs of each function.

5. Why is responsible AI becoming essential for enterprises now?

With AI being used in core business processes, companies face greater risks around privacy, compliance, and trust. Responsible AI ensures systems remain reliable, transparent, and aligned with organizational values.